Using SAM-2 and PIL to automate my lil Duographs

Note for digital viewing: your browser will probably quantise these images in a way that's a bit hostile to the halftone patterns. Try zooming in and out - the patterns on the images might change. Viewing the images directly in a new tab will show them properly.

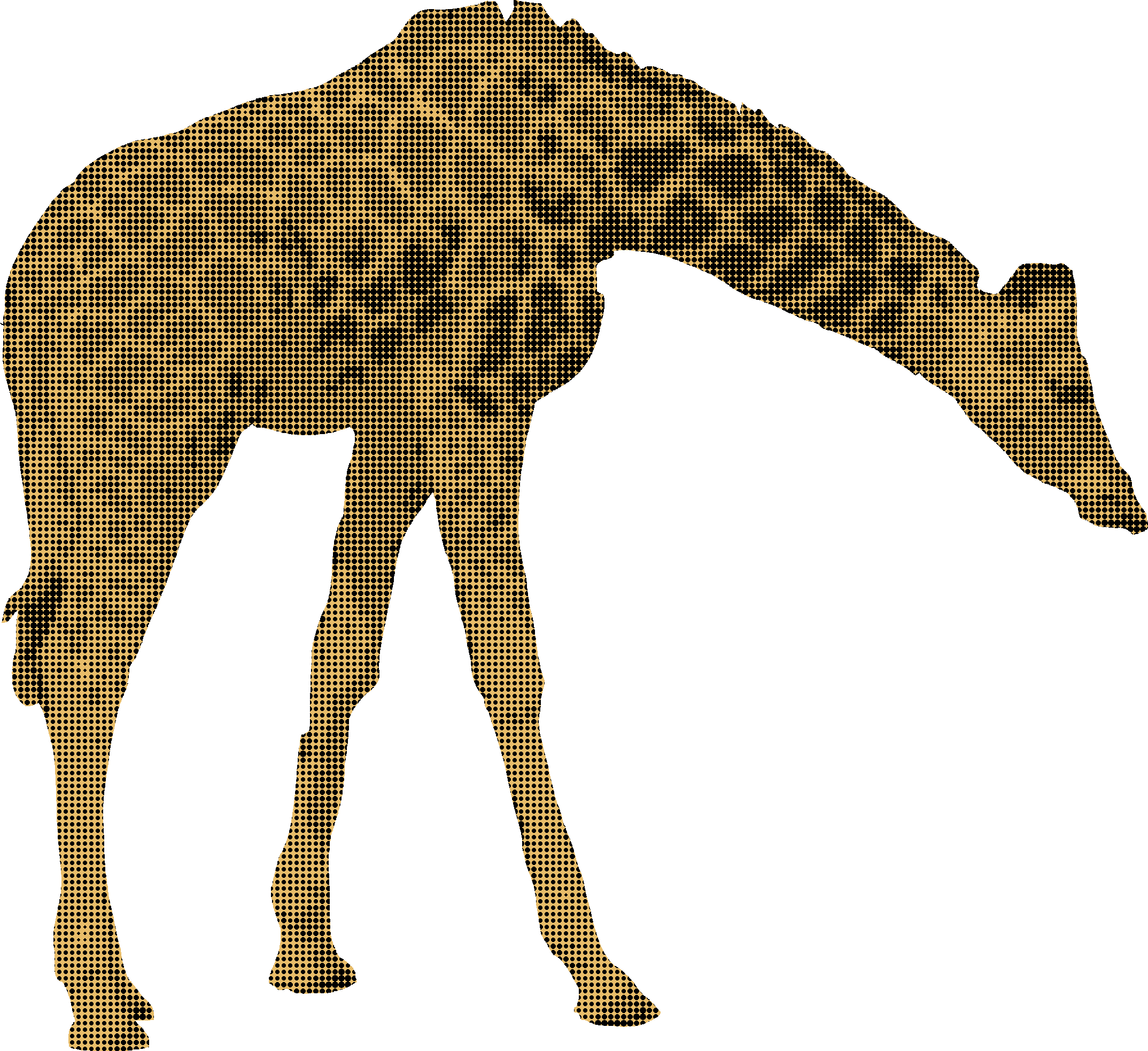

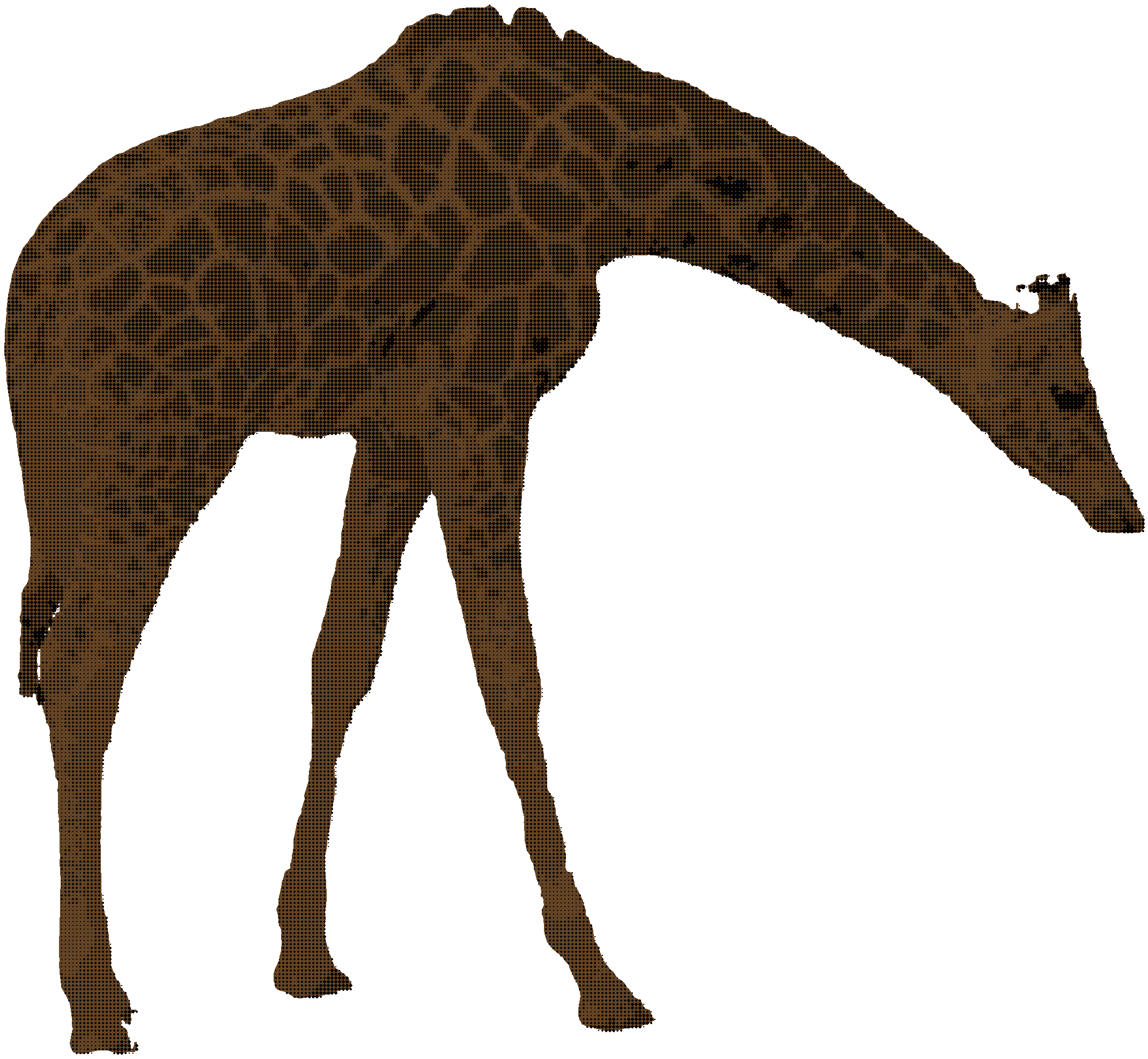

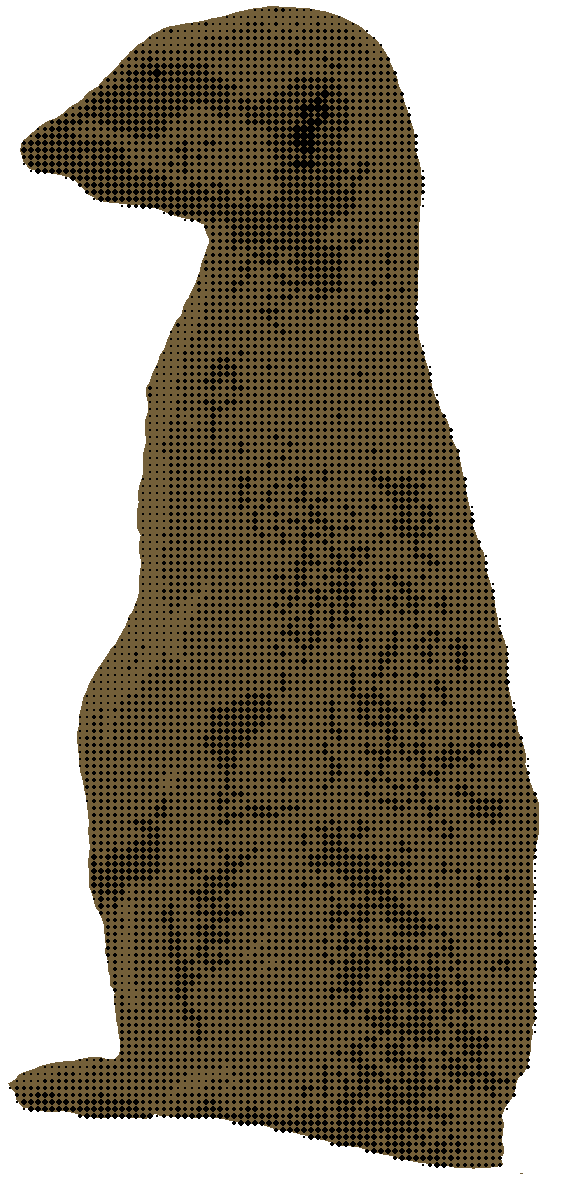

I've been going down a little rabbit hole of old print techniques, with an eye toward recreating their aesthetic digitally for this site. The animals in the sidebar are duographs I made with a short Python script that does grayscale halftoning, applied over a mask I made manually in GIMP with a flat background colour. Here's an example:

Anyway, I decided that wouldn't cut it - I'd only made a few and it only took me a few minutes each - but the role of a programmer is to take occasional few minute tasks and spend a few hours attempting to automate them before giving up or being met with a slight efficiency increase (usually not enough to justify the investment).

So I looked for an existing desktop wrapper for SAM-2 that I could repurpose, ended up cloning this GitHub repo (luca-medeiros/lang-segment-anything), and got to work.

The Plan

I needed a few things:

One: a way to programmatically get a mask for a particular part of an image (SAM-2 can do this for me, especially when it's grounded)

Two: a way to find the dominant colour in an image for the flat background colour (the average won't look great - I'll show some examples of this)

Three: a way to programmatically generate a halftone of an image (I already had this - a Python script that averages areas of the image and places circles whose radius is set by the computed brightness)

Four: a way to combine the mask, a flat background colour, and the generated grayscale halftone without needing to use GIMP or similar

Five: a user interface to perform this on arbitrary images without needing to touch the command line (e.g. a web interface for my needs)

Six: the flexibility to add batch processing in the future if I want to do this with a bunch of images (the solution should be reusable, perhaps as a module/library)
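Since point Three is described only in words, here is a minimal sketch of that kind of grayscale halftone using PIL. It is not the original script: the function name `halftone`, the `cell` size, and the choice to scale the radius with the square root of darkness (so dot area tracks darkness) are all assumptions.

```python
from PIL import Image, ImageDraw, ImageStat


def halftone(image: Image.Image, cell: int = 8) -> Image.Image:
    """Grayscale halftone: one black dot per cell x cell block of the image.

    Hypothetical sketch. Each block's mean brightness sets its dot's radius;
    the radius scales with sqrt(darkness) so the dot's *area* tracks how dark
    the block is (an assumption; a linear mapping works too).
    """
    gray = image.convert("L")
    width, height = gray.size
    out = Image.new("L", (width, height), 255)  # start from white "paper"
    draw = ImageDraw.Draw(out)
    max_radius = cell / 2

    for top in range(0, height, cell):
        for left in range(0, width, cell):
            box = (left, top, min(left + cell, width), min(top + cell, height))
            mean = ImageStat.Stat(gray.crop(box)).mean[0]  # 0 = black, 255 = white
            darkness = 1.0 - mean / 255.0
            radius = max_radius * darkness ** 0.5
            if radius < 0.5:
                continue  # too small to register as a pixel; leave the paper white
            cx = left + cell / 2
            cy = top + cell / 2
            draw.ellipse((cx - radius, cy - radius, cx + radius, cy + radius), fill=0)
    return out
```

Larger `cell` values give coarser, more obviously "printed" dots; smaller ones keep more detail.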

Three was already solved, but One was the main concern (at least initially - it wasn't clear that SAM-2 would be any good at this). Helpfully, the authors of lang-segment-anything included an example snippet that predicts a mask from an image and a prompt, though it says nothing about the output format or further processing. Running the snippet and checking the keys on the prediction result reveals five things of potential interest: 'scores', 'labels', 'boxes', 'masks' and 'mask_scores'.

'masks' seems particularly juicy, and its large dimensions suggest it's meant to be used as (or to produce) a visual overlay for the original image (just what we want! We can use it to cut the images out like the original online segment-anything demo). I got a little stuck here and consulted an LLM, which helped me figure out that the mask is a per-pixel map of the input image: confidence values for the prompt, thresholded by the model's existing wrapper and kept in the range zero to one. Pulling these values into a NumPy array and multiplying them by 255 gives us the full black-to-white range we want for our mask, and PIL's fromarray method can then automagically create the mask image. Here's an example from this stage:

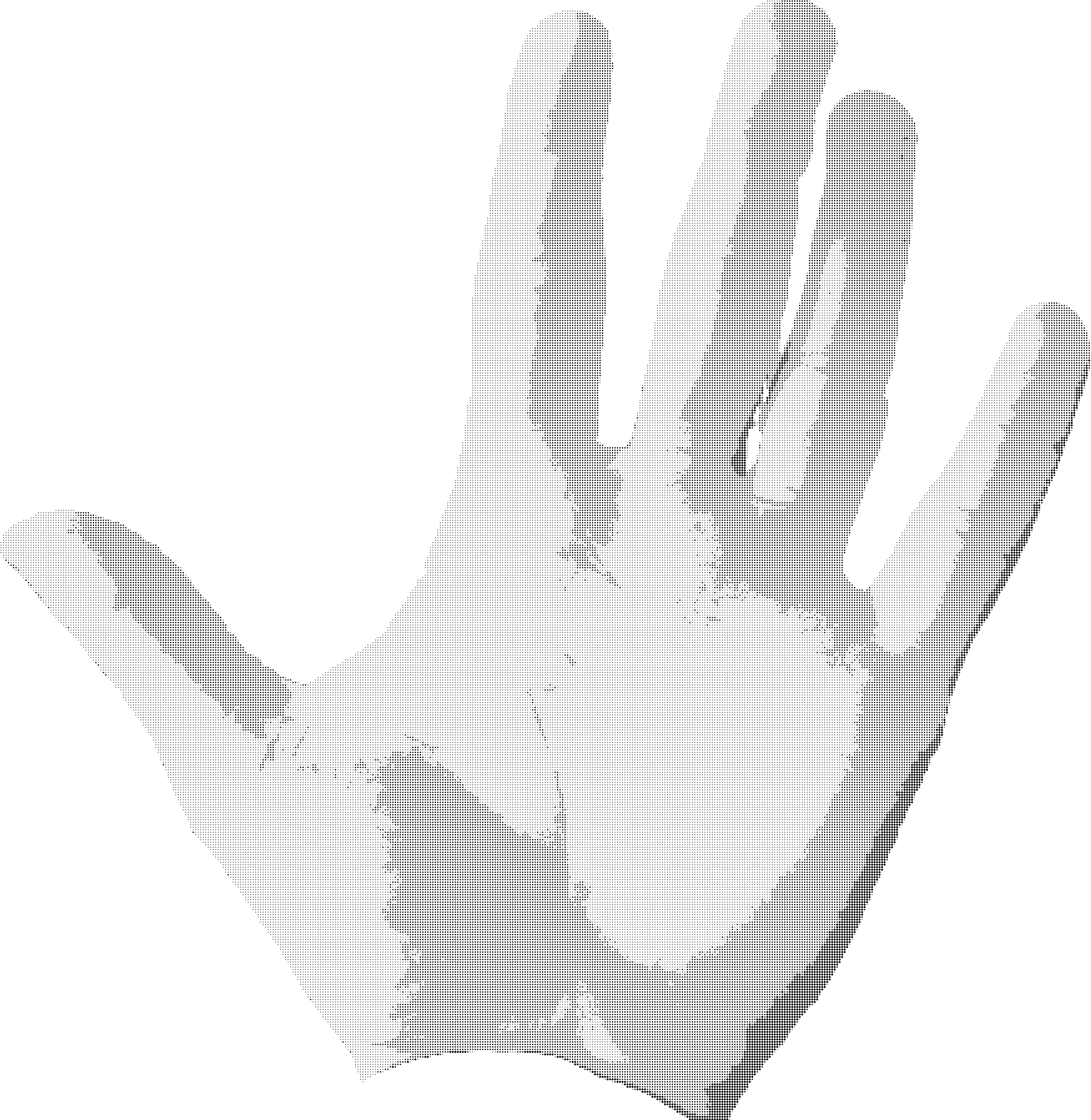

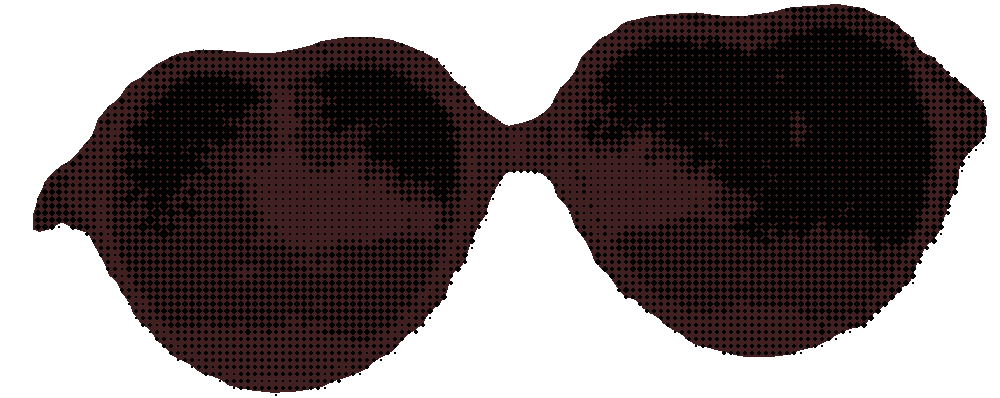
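A minimal sketch of the "multiply by 255 and use fromarray" step. It assumes the 'masks' value is a NumPy array of values in [0, 1], either a single (H, W) mask or a stack shaped (N, H, W) with one mask per detection; merging a stack with a per-pixel maximum is my own assumption, and the helper name `mask_to_image` is hypothetical.

```python
import numpy as np
from PIL import Image


def mask_to_image(masks: np.ndarray) -> Image.Image:
    """Turn mask values in [0, 1] into an 8-bit black/white PIL mask ("L" mode).

    Hypothetical helper. Assumes shape (H, W), or (N, H, W) for several
    detections, which are merged by taking the per-pixel maximum.
    """
    values = np.asarray(masks, dtype=np.float32)
    if values.ndim == 3:
        values = values.max(axis=0)  # union of all detected objects
    # Scale 0..1 up to 0..255; a 2-D uint8 array becomes an "L" image.
    scaled = np.clip(values, 0.0, 1.0) * 255
    return Image.fromarray(scaled.astype(np.uint8))


# Hypothetical usage, loosely following the lang-segment-anything README
# (check the repo; the call may differ between versions):
#   from lang_sam import LangSAM
#   results = LangSAM().predict([image_pil], ["giraffe."])
#   mask = mask_to_image(results[0]["masks"])
```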

That's all we need for now! Smooth sailing from here on out. Now we just need to use this to clip a generated halftone (or the image before creating a halftone) and we're almost done. PIL's Image class has a paste method that accepts a mask in the black-and-white format we already have, so if we create a blank image and paste in a halftone generated from the original image, using the mask above, we get an image like the following:

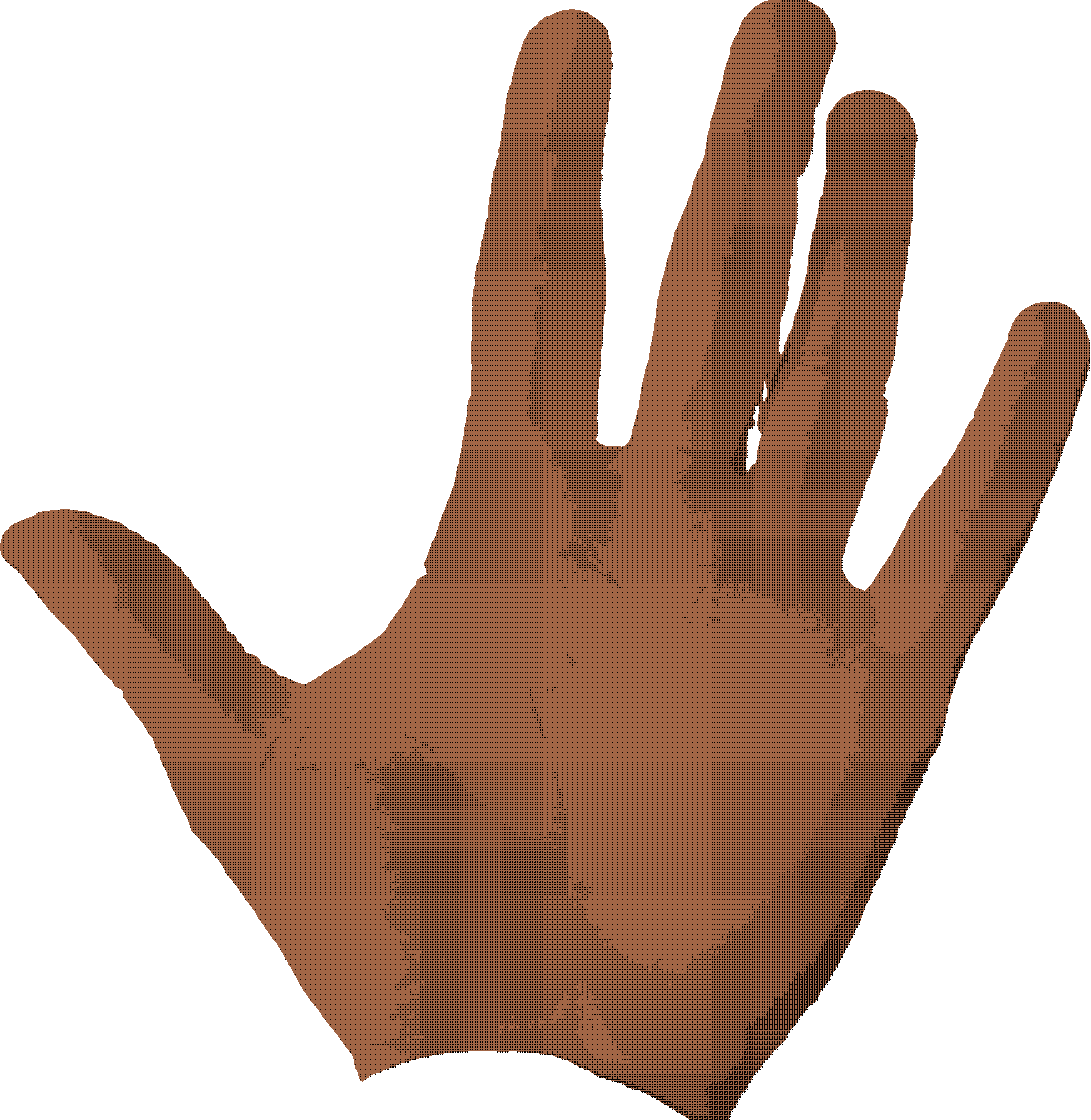
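The paste step can be sketched like this. The helper name `cut_out` is hypothetical, and the transparent default background is an assumption (it makes later compositing easier); Image.paste itself requires the mask to be the same size as the pasted image.

```python
from PIL import Image


def cut_out(halftone: Image.Image, mask: Image.Image,
            background=(255, 255, 255, 0)) -> Image.Image:
    """Paste `halftone` onto a blank canvas, keeping it only where `mask` is white.

    Hypothetical helper; the background defaults to fully transparent.
    """
    if halftone.size != mask.size:
        raise ValueError("halftone and mask must be the same size")
    canvas = Image.new("RGBA", halftone.size, background)
    # With a mask, paste copies halftone pixels where the mask is 255, leaves
    # the canvas untouched where it is 0, and blends in between.
    canvas.paste(halftone.convert("RGBA"), (0, 0), mask.convert("L"))
    return canvas
```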

The only thing missing now, compared to the manually masked image (the giraffe from the start), is the background colour. My initial thought was to average the colours in the image after the mask had been applied, but this results in a colour far too dark, since the halftoning is grayscale and pulls the overall brightness down (a Stack Overflow answer convinced me not to do this - I tried it anyway and it wasn't great). Instead I went with grabbing the dominant colour via k-means clustering (discussed in the same Stack Overflow answer - it has some fun graphics, too), creating a blank image in that colour, and pasting it onto a new blank image with the mask as a mask (go figure). Increasing the saturation and placing a halftone on top creates a reasonable duograph. Great! Here's where we're at:

Note: my skin tone is considerably lighter than the above image might suggest - this approach isn't great at accurate colour reproduction for most of my test cases, but it works well for things like giraffes (and who cares what my skin tone looks like in a stylised duograph).
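Here is a rough sketch of the dominant-colour and compositing steps, using a small hand-rolled k-means in NumPy rather than any particular library's implementation. Everything here is an assumption for illustration: the helper names, `k=4`, downsampling to 200 px before clustering (for speed), the 1.5 saturation boost via ImageEnhance.Color, and multiplying the halftone over the flat colour so white "paper" lets the colour through while the dots stay dark.

```python
import numpy as np
from PIL import Image, ImageChops, ImageEnhance


def dominant_colour(image, mask, k=4, iterations=20, seed=0, max_side=200):
    """Centre of the largest k-means cluster of RGB pixels inside `mask` (hypothetical)."""
    small = image.convert("RGB")  # convert() returns a copy, safe to shrink
    small.thumbnail((max_side, max_side))  # clustering every pixel is slow
    small_mask = mask.convert("L").resize(small.size)
    pixels = np.asarray(small, dtype=np.float64).reshape(-1, 3)
    pixels = pixels[np.asarray(small_mask).reshape(-1) > 127]  # keep masked pixels
    if len(pixels) == 0:
        raise ValueError("mask is empty")

    rng = np.random.default_rng(seed)
    k = min(k, len(pixels))
    centres = pixels[rng.choice(len(pixels), size=k, replace=False)]

    def assign():
        # Label each pixel with the index of its nearest centre.
        distances = np.linalg.norm(pixels[:, None, :] - centres[None, :, :], axis=2)
        return distances.argmin(axis=1)

    for _ in range(iterations):
        labels = assign()
        for i in range(k):
            members = pixels[labels == i]
            if len(members):
                centres[i] = members.mean(axis=0)
    labels = assign()
    # Dominant colour = centre of the biggest cluster, not the overall mean.
    biggest = np.bincount(labels, minlength=k).argmax()
    return tuple(int(c) for c in centres[biggest].round())


def duograph(halftone, mask, colour, saturation=1.5):
    """Flat colour inside `mask` with the halftone multiplied over it (hypothetical)."""
    swatch = Image.new("RGB", mask.size, colour)
    swatch = ImageEnhance.Color(swatch).enhance(saturation)  # >1 boosts saturation
    inked = ImageChops.multiply(swatch, halftone.convert("RGB"))
    canvas = Image.new("RGBA", mask.size, (0, 0, 0, 0))
    canvas.paste(inked, (0, 0), mask.convert("L"))
    return canvas
```

Taking the biggest cluster's centre is what keeps a few dark shadows (or halftone dots) from dragging the colour down the way a plain average does.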

Speaking of giraffes, here's the same giraffe image I converted manually earlier, this time processed automatically (I didn't make the mask):

It's not quite as accurate or smooth as the manual one, and the background colour choice could still do with some tweaking, but I think it's more than 95% of the way there, to the point where I'd be happy using these on my site. And I will be tweaking it.

Here are some other images from this process:

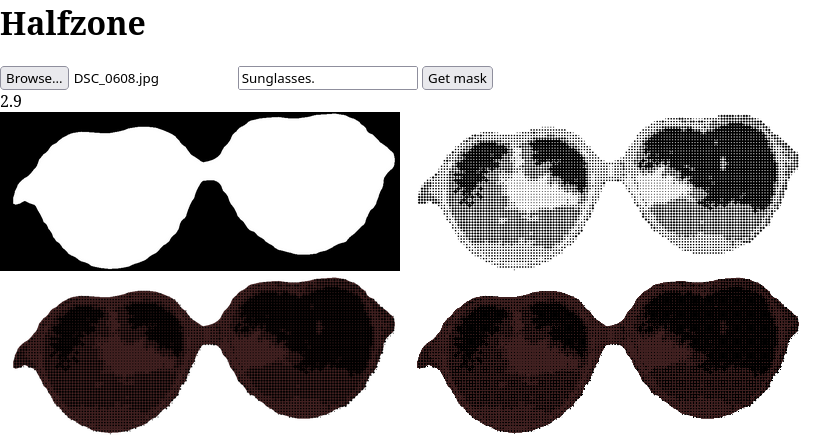

Anyway. The very last part of this is that I made a small Flask-based web frontend, so I can upload images and do all of this processing from the comfort of my browser, with easy access to the intermediates if I want to edit them in another program. Here's what that ended up looking like (it's only for me, and local-only for the moment, so I didn't bother with styling):

(for pook):

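For anyone wanting something similar, a bare-bones Flask upload-and-process page might look like the hypothetical sketch below. It is not the frontend described here: the route, the form field name `image`, and `process` (a stand-in that just converts to grayscale where the real mask/halftone/colour pipeline would go) are all assumptions.

```python
import io

from flask import Flask, request, send_file
from PIL import Image, ImageOps

app = Flask(__name__)

UPLOAD_FORM = """<form method="post" enctype="multipart/form-data">
  <input type="file" name="image" accept="image/*">
  <button type="submit">Process</button>
</form>"""


def process(image: Image.Image) -> Image.Image:
    # Hypothetical stand-in for the real pipeline (mask, halftone, colour, composite).
    return ImageOps.grayscale(image)


@app.route("/", methods=["GET", "POST"])
def index():
    if request.method == "GET":
        return UPLOAD_FORM  # unstyled, local-only upload form
    upload = request.files.get("image")
    if upload is None or upload.filename == "":
        return "No image uploaded", 400
    result = process(Image.open(upload.stream))
    buffer = io.BytesIO()
    result.save(buffer, format="PNG")
    buffer.seek(0)
    return send_file(buffer, mimetype="image/png")
```

Saved as app.py, `flask --app app run` serves it on 127.0.0.1 by default, which suits a local-only tool.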

Good fun :)

Disclaimer: I do not support Meta; I just think SAM is cool and useful. Meta hasn't received a cent from me for my use of it, and I oppose their recent moderation changes that explicitly allow selective abuse toward already marginalised communities on their platforms.